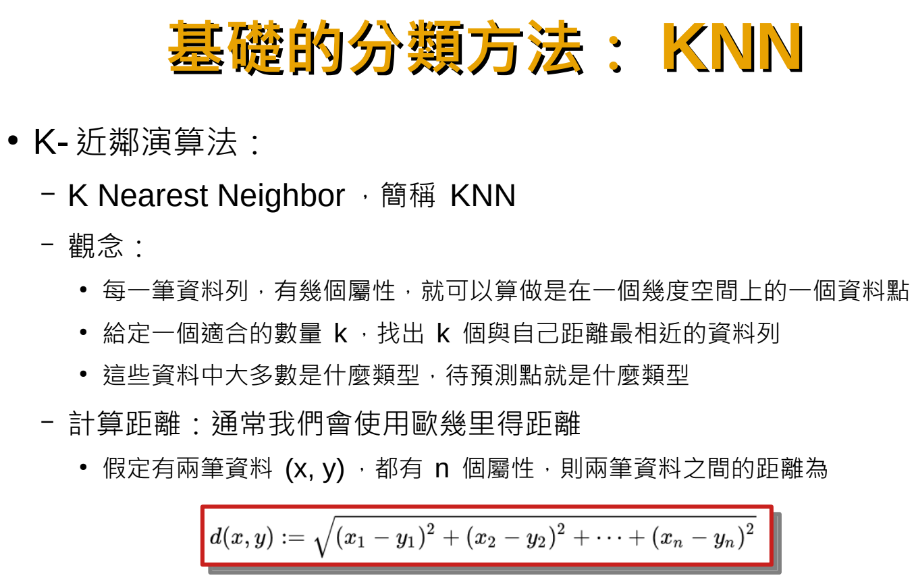

K-Nearest Neighbors algorithm (KNN for short):

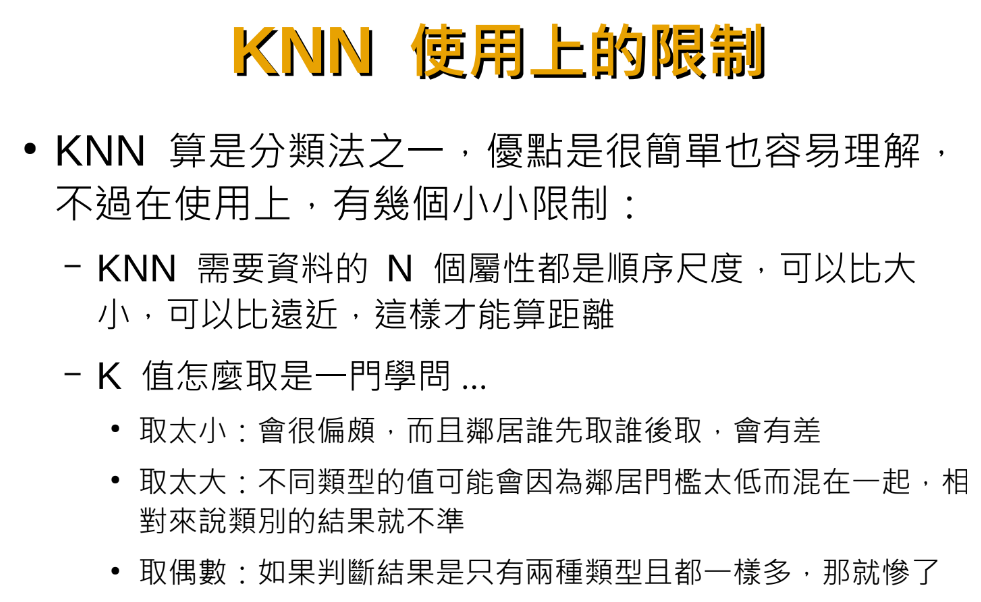

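KNN requires that all N attributes of the data be at least ordinal-scale: values must be comparable in magnitude and in how far apart they are, because that is what makes it possible to compute a distance.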
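To make the distance idea concrete, here is a minimal from-scratch sketch of the KNN vote. It assumes Euclidean distance and a simple majority vote. The function name `knn_predict` and the toy points are made up for illustration and are not part of sklearn or of the dataset used later.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every training point
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbours
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Majority vote over the neighbours' labels
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two numeric attributes per sample
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
labels = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(points, labels, (2.0, 2.0), k=3))  # the three nearest are all "A"
print(knn_predict(points, labels, (6.0, 7.0), k=3))  # the three nearest are all "B"
```

If an attribute could not be ordered or subtracted (for example, a raw category name), `math.dist` would have nothing meaningful to compute, which is why the attributes need to be numeric or at least rankable.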

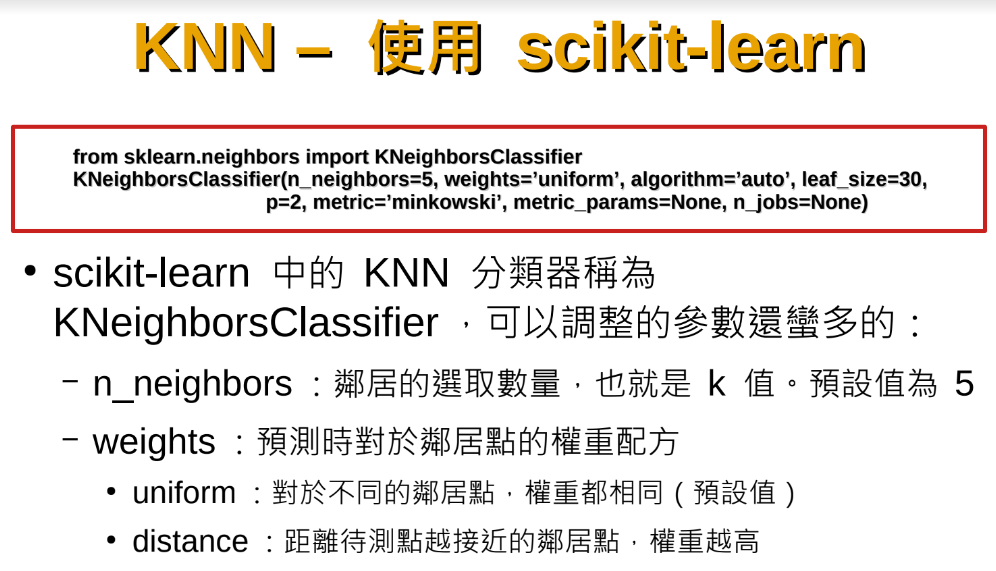

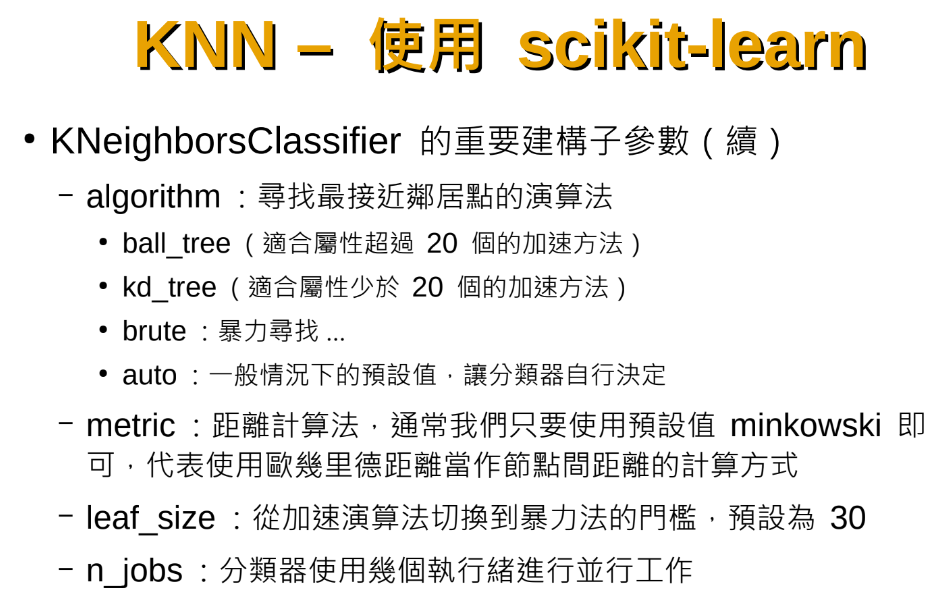

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import train_test_split

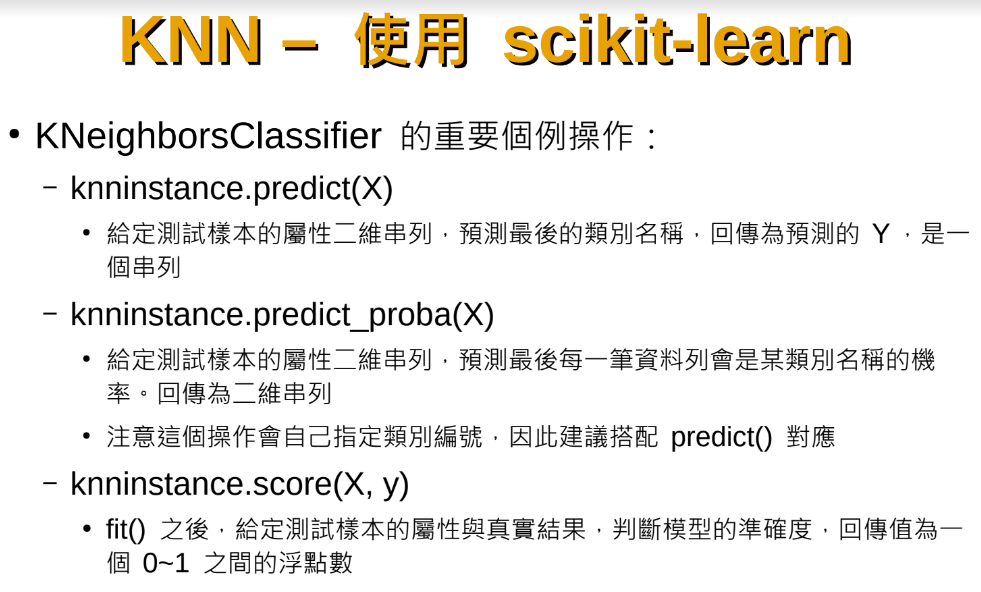

sklearn

predict

predict_proba
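As a small sketch of how `predict` and `predict_proba` differ, the toy example below fits a `KNeighborsClassifier` on made-up one-dimensional data (an assumption for illustration, not the student data). With the default uniform weights, each probability is simply the fraction of the k neighbours that belong to that class.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical toy data: one numeric feature, two classes (0 and 1)
X = np.array([[0.0], [1.0], [2.0], [10.0], [11.0], [13.0]])
y = np.array([0, 0, 0, 1, 1, 1])

clf = KNeighborsClassifier(n_neighbors=3)
clf.fit(X, y)

query = np.array([[1.5], [6.2]])
pred = clf.predict(query)          # one class label per query row
proba = clf.predict_proba(query)   # one row per query, one column per class

print(clf.classes_)  # column order used by predict_proba
print(pred)          # [0 1]
print(proba)         # first row is all class 0; second row is 1/3 vs 2/3
```

For the query 6.2 the three nearest points are 10.0, 2.0 and 11.0, so two of three neighbours are class 1: `predict` returns 1 and `predict_proba` returns roughly `[0.33, 0.67]`.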

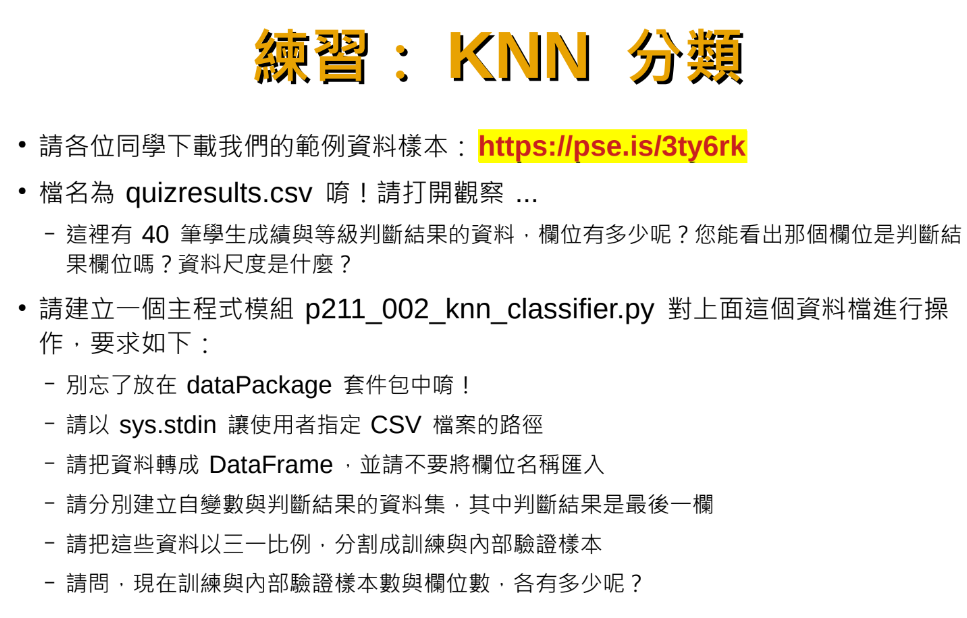

Part of the data:

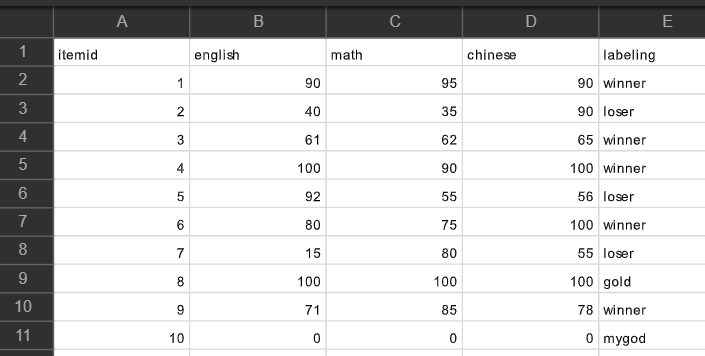

import pandas as pd

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import train_test_split

folder = r"C:\Python\P107\doc"  # raw string so the backslashes stay literal

fname = "student.csv"

import os

fpath = os.path.join(folder, fname)

# fpath = folder + "\\" + fname  # equivalent

df = pd.read_csv(fpath, header=None, skiprows=[0])  # columns are labeled 0, 1, 2, ...

df.to_excel(os.path.join(folder, "knsDF.xlsx"))

#df.index.size = 40 #df.columns.size = 5

X = df.drop([4],axis=1).values

# drop([0, 4]) can improve the score

# Column 0 works like an index, not real data

# The score still beats KNN with column 0 removed

y = df[4].values

Xtrain, Xtest, ytrain, ytest = train_test_split(

    X, y, test_size=0.25,

    random_state=42, shuffle=True)

XtestDF = pd.DataFrame(Xtest)

XtestDF.to_excel(os.path.join(folder, "knsXtest.xlsx"))

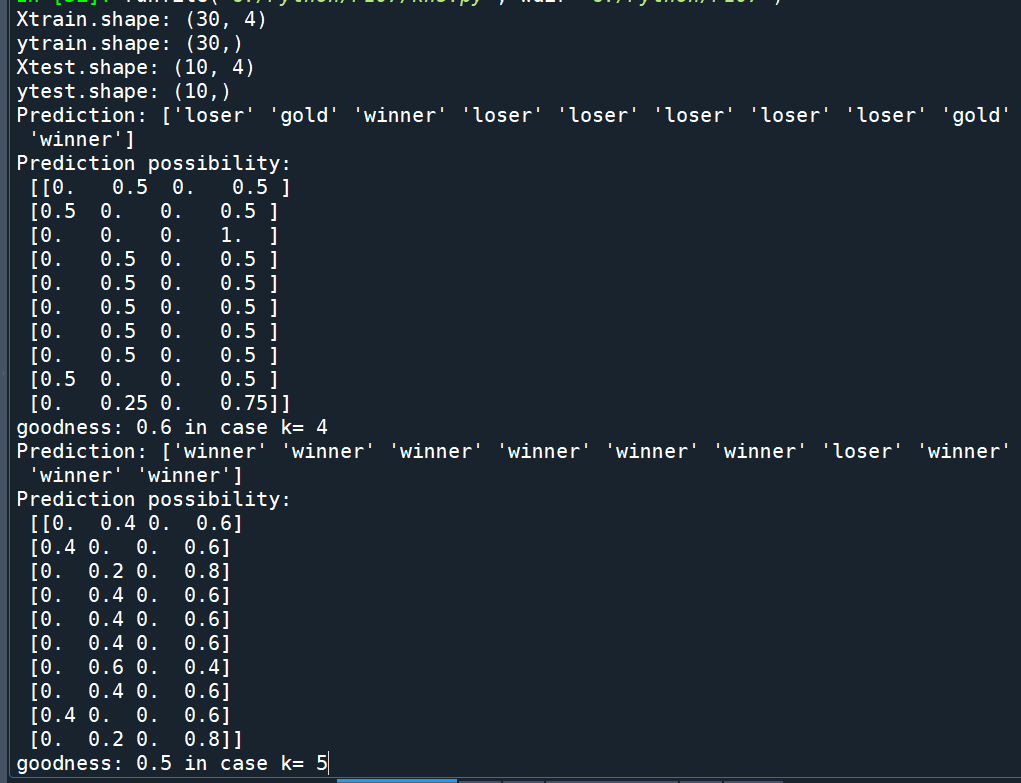

print("Xtrain.shape:", Xtrain.shape)  # (30, 3) with drop([0, 4]); (30, 4) with drop([4])

print("ytrain.shape:", ytrain.shape)  # (30,)

print("Xtest.shape:", Xtest.shape)  # (10, 3) with drop([0, 4]); (10, 4) with drop([4])

print("ytest.shape:", ytest.shape)  # (10,)
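The 30/10 split in those shapes follows directly from `test_size=0.25` on 40 rows. The sketch below checks this on random stand-in data (an assumption, since the CSV itself is not reproduced here) and also shows that a fixed `random_state` makes the split repeatable.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for the CSV: 40 rows, 4 feature columns, 1 label each
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(40, 4))
y_demo = rng.integers(0, 2, size=40)

Xtr, Xte, ytr, yte = train_test_split(
    X_demo, y_demo, test_size=0.25, random_state=42, shuffle=True
)
print(Xtr.shape, Xte.shape)  # (30, 4) (10, 4)
print(ytr.shape, yte.shape)  # (30,) (10,)

# Same random_state, same rows in the test set
Xtr2, Xte2, ytr2, yte2 = train_test_split(
    X_demo, y_demo, test_size=0.25, random_state=42, shuffle=True
)
print(np.array_equal(Xte, Xte2))  # True
```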

for k in range(4, 11):

    kns = KNeighborsClassifier(n_neighbors=k)

    kns.fit(Xtrain, ytrain)

    ypred = kns.predict(Xtest)  # predicted class labels

    ypred2 = kns.predict_proba(Xtest)  # per-class probabilities

    print("Prediction:", ypred)

    print("Prediction probability:\n", ypred2)

    howgood = kns.score(Xtest, ytest)  # mean accuracy on the test set

    print("goodness:", howgood, "in case k=", k)
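The loop prints one score per k. If the goal is to pick a k, one option is to collect the scores in a dict and take the maximum. The sketch below does that on synthetic data from `make_blobs` (an assumption standing in for student.csv), so the actual numbers will differ from the real run.

```python
from sklearn.datasets import make_blobs
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical data: 40 samples, 3 numeric features, 2 classes
X_demo, y_demo = make_blobs(n_samples=40, n_features=3, centers=2, random_state=0)
Xtr, Xte, ytr, yte = train_test_split(
    X_demo, y_demo, test_size=0.25, random_state=42, shuffle=True
)

scores = {}
for k in range(4, 11):
    kns = KNeighborsClassifier(n_neighbors=k)
    kns.fit(Xtr, ytr)
    scores[k] = kns.score(Xte, yte)  # mean accuracy on the test set

# Smallest k among those with the highest accuracy (max keeps the first maximum)
best_k = max(scores, key=scores.get)
print(scores)
print("best k:", best_k, "accuracy:", scores[best_k])
```

With only 10 test rows, each misclassified sample moves the accuracy by 0.1, so differences between neighbouring values of k should be read with caution.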

Part of the output:
