See the previous post: How to connect to the Meta API?

The list of terms comes from the generated JSON file, segmented with the jieba word-segmentation package.
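The scripts in this post assume the JSON file holds a single key whose value is a list of message strings (inferred from `list(dic.keys())[0]` in the code). A minimal sketch of that extraction step, using an inline JSON string in place of the file; the key name `"messages"` is hypothetical, since the real file's key is not shown here.

```python
import json

# Hypothetical stand-in for my_lis_msg_backup.json: one key -> list of messages.
# The key name "messages" is an assumption, not the real file's key.
raw = '{"messages": ["第一則訊息", "第二則訊息"]}'

dic = json.loads(raw)
first_key = next(iter(dic))   # same result as list(dic.keys())[0], without building a list
lis = dic[first_key]          # the list of message strings
strr = "\n".join(lis)         # one text, messages separated by newlines
print(first_key, len(lis))    # messages 2
```

Dicts keep insertion order (guaranteed since Python 3.7) and `json.loads` preserves the key order of the file, so the "first key" is the first key written in the JSON.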

Also covered: computing term frequency (TF) over the whole document.
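Here TF is a term's number of occurrences divided by the total number of terms. A minimal sketch with `collections.Counter`, using a hypothetical pre-segmented token list (roughly what `jieba.lcut` might return) so it runs without jieba:

```python
from collections import Counter


def term_frequency(tokens):
    """Return {term: count / total} for a list of tokens."""
    if not tokens:
        return {}
    total = len(tokens)
    counts = Counter(tokens)  # occurrences per distinct term
    return {term: n / total for term, n in counts.items()}


# Hypothetical segmented tokens (8 in total)
tokens = ["我", "喜歡", "Python", "，", "我", "也", "喜歡", "jieba"]
tf = term_frequency(tokens)
print(tf["喜歡"])  # 2 / 8 = 0.25
```

Dividing once per term (count / total) gives the same values as adding `1/leng` on every occurrence, up to floating-point rounding, and avoids accumulating that rounding over many additions.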

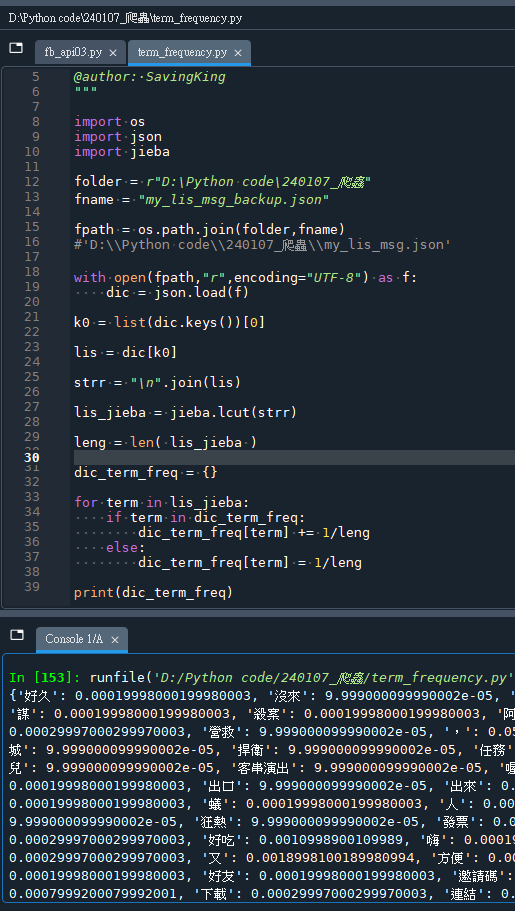

code:

```python
# -*- coding: utf-8 -*-
"""
Created on Mon Jan 15 06:30:23 2024

@author: SavingKing
"""
import os
import json

import jieba

folder = r"D:\Python code\240107_爬蟲"
fname = "my_lis_msg_backup.json"
fpath = os.path.join(folder, fname)
# 'D:\\Python code\\240107_爬蟲\\my_lis_msg.json'

with open(fpath, "r", encoding="UTF-8") as f:
    dic = json.load(f)

k0 = list(dic.keys())[0]      # the JSON holds one key whose value is the message list
lis = dic[k0]
strr = "\n".join(lis)         # join all messages into one text

lis_jieba = jieba.lcut(strr)  # segment the text into a list of terms
leng = len(lis_jieba)         # total number of terms

# TF: occurrences of each term divided by the total number of terms
dic_term_freq = {}
for term in lis_jieba:
    if term in dic_term_freq:
        dic_term_freq[term] += 1/leng
    else:
        dic_term_freq[term] = 1/leng

print(dic_term_freq)
```

Output:

Recommended: learn Python online at hahow: https://igrape.net/30afN

Which terms appear most often?

What is their frequency?

# -*- coding: utf-8 -*-

"""

Created on Mon Jan 15 06:30:23 2024

@author: SavingKing

"""

import os

import json

import jieba

folder = r"D:\Python code\240107_爬蟲"

fname = "my_lis_msg_backup.json"

fpath = os.path.join(folder,fname)

#'D:\\Python code\\240107_爬蟲\\my_lis_msg.json'

with open(fpath,"r",encoding="UTF-8") as f:

dic = json.load(f)

k0 = list(dic.keys())[0]

lis = dic[k0]

strr = "\n".join(lis)

lis_jieba = jieba.lcut(strr)

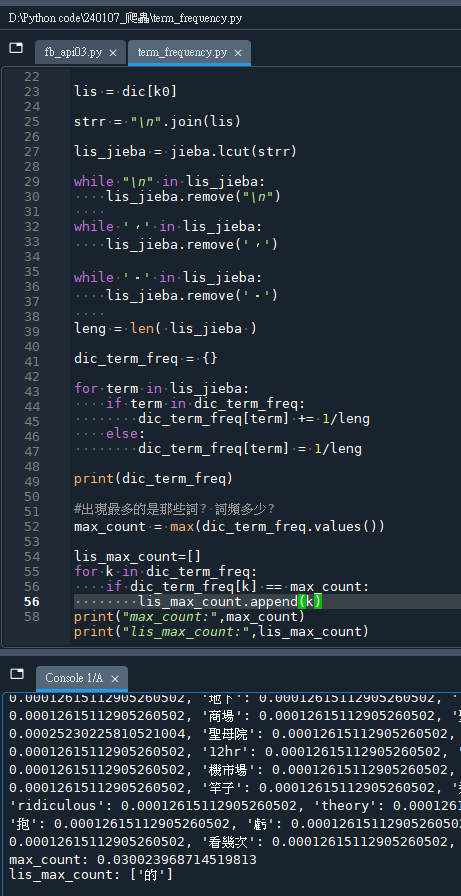

while "\n" in lis_jieba:

lis_jieba.remove("\n")

while ',' in lis_jieba:

lis_jieba.remove(',')

while '。' in lis_jieba:

lis_jieba.remove('。')

leng = len( lis_jieba )

dic_term_freq = {}

for term in lis_jieba:

if term in dic_term_freq:

dic_term_freq[term] += 1/leng

else:

dic_term_freq[term] = 1/leng

print(dic_term_freq)

#出現最多的是那些詞? 詞頻多少?

max_count = max(dic_term_freq.values())

lis_max_count=[]

for k in dic_term_freq:

if dic_term_freq[k] == max_count:

lis_max_count.append(k)

print("max_count:",max_count)

print("lis_max_count:",lis_max_count)輸出結果:

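The most frequent term is "\n".

After removing "\n", the comma becomes the most frequent.

After removing the comma, the period becomes the most frequent.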
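Removing one punctuation mark at a time with `while x in lis: lis.remove(x)` works, but every `remove` call rescans the list. A sketch that drops a set of unwanted tokens in one pass and then collects every term tied for the top count. The token list and the `SKIP` set are assumed examples; both the ASCII `,` and the full-width `，` are listed because jieba keeps whichever form appears in the text.

```python
from collections import Counter

# Hypothetical segmented tokens, including newline and punctuation tokens
tokens = ["今天", "\n", "天氣", "，", "很", "好", "。", "\n", "今天", "，", "很", "熱", "。"]

# Assumed set of tokens to drop; extend as needed
SKIP = {"\n", ",", "，", "。", " "}

kept = [t for t in tokens if t not in SKIP]  # single pass, no repeated list.remove
counts = Counter(kept)

top = max(counts.values())
ties = [term for term, n in counts.items() if n == top]  # all terms sharing the top count
print(top, ties)  # 2 ['今天', '很']
```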

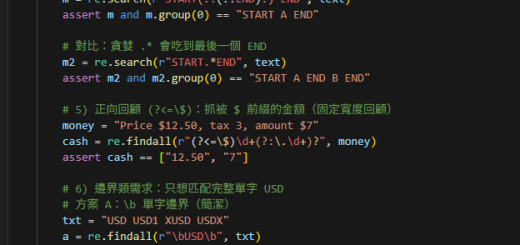

To filter out terms that are frequent but unimportant, we need IDF (Inverse Document Frequency).

A simpler alternative is to keep only terms whose length is >= 2.
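A minimal TF-IDF sketch, assuming each message is treated as one document (the post does not define what a document is). It uses the basic form idf(t) = log(N / df(t)), where N is the number of documents and df(t) is how many of them contain t; libraries such as scikit-learn use smoothed variants by default, so their numbers will differ. The documents are hypothetical.

```python
import math
from collections import Counter

# Hypothetical: each message is one "document", already segmented
docs = [
    ["今天", "天氣", "很", "好"],
    ["今天", "很", "熱"],
    ["明天", "會", "下雨"],
]

N = len(docs)
# Document frequency: number of documents each term appears in (set() counts a term once per doc)
df = Counter(term for doc in docs for term in set(doc))
idf = {term: math.log(N / n) for term, n in df.items()}


def tf_idf(doc):
    """TF (count / total terms in doc) multiplied by IDF."""
    counts = Counter(doc)
    total = len(doc)
    return {t: (c / total) * idf[t] for t, c in counts.items()}


scores = tf_idf(docs[0])
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)
```

A term that appears in every document gets idf = log(1) = 0, which is how frequent but uninformative tokens such as punctuation are pushed to the bottom.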


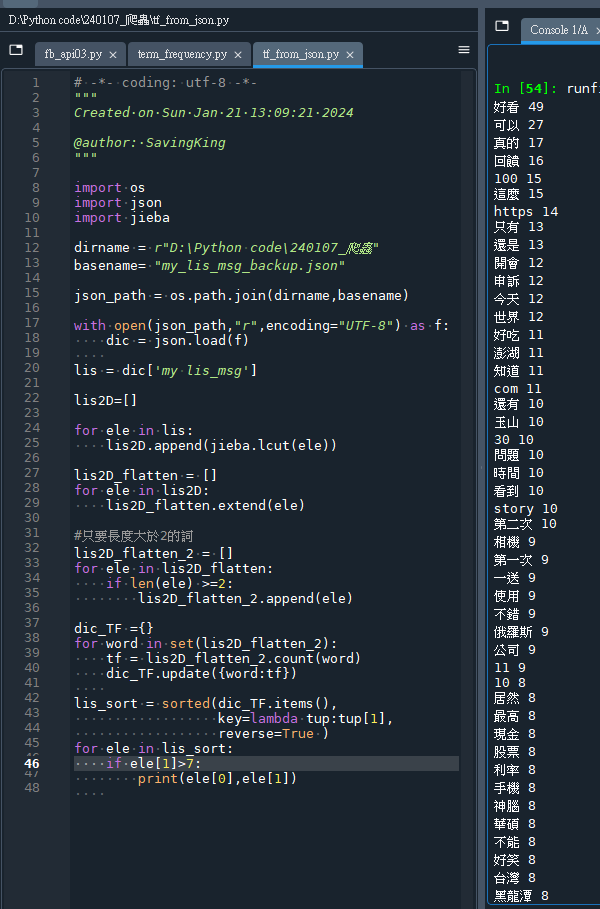

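Use `list.count(str)` to build `dic_TF` in place of the following loop (note that this version stores raw counts, not counts divided by the total):

```python
for term in lis_jieba:
    if term in dic_term_freq:
        dic_term_freq[term] += 1/leng
    else:
        dic_term_freq[term] = 1/leng
```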
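For comparison: `list.count` rescans the whole list on every call, so counting each distinct word this way takes time proportional to (number of terms) × (number of distinct terms), while `collections.Counter` counts everything in a single pass. A sketch on hypothetical per-message token lists; the threshold `n > 1` is chosen for this tiny example (the code that follows filters with `> 7` on its real data).

```python
from collections import Counter

# Hypothetical per-message segmentation results (what jieba.lcut might return)
lis2D = [["今天", "天氣", "很", "好"], ["今天", "很", "熱"], ["今天", "下雨"]]

# Flatten and keep only terms of length >= 2
terms = [t for row in lis2D for t in row if len(t) >= 2]

dic_TF = Counter(terms)               # raw count per term, single pass
for word, n in dic_TF.most_common():  # (term, count) pairs, highest count first
    if n > 1:
        print(word, n)                # 今天 3
```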

code:

```python
# -*- coding: utf-8 -*-
"""
Created on Sun Jan 21 13:09:21 2024

@author: SavingKing
"""
import os
import json

import jieba

dirname = r"D:\Python code\240107_爬蟲"
basename = "my_lis_msg_backup.json"
json_path = os.path.join(dirname, basename)

with open(json_path, "r", encoding="UTF-8") as f:
    dic = json.load(f)
lis = dic['my lis_msg']

# Segment each message separately -> 2D list of terms
lis2D = []
for ele in lis:
    lis2D.append(jieba.lcut(ele))

# Flatten the 2D list into a single list of terms
lis2D_flatten = []
for ele in lis2D:
    lis2D_flatten.extend(ele)

# Keep only terms of length >= 2
lis2D_flatten_2 = []
for ele in lis2D_flatten:
    if len(ele) >= 2:
        lis2D_flatten_2.append(ele)

# Count the occurrences of each distinct term
dic_TF = {}
for word in set(lis2D_flatten_2):
    tf = lis2D_flatten_2.count(word)
    dic_TF.update({word: tf})

# Sort by count, descending
lis_sort = sorted(dic_TF.items(),
                  key=lambda tup: tup[1],
                  reverse=True)

# Print terms that occur more than 7 times
for ele in lis_sort:
    if ele[1] > 7:
        print(ele[0], ele[1])
```

Output:
